How do you design an LLM agent that decides by itself what to store in long-term memory, what to keep in short-term context, and what to discard, without hand-tuned heuristics or extra controllers? Can a single policy learn to manage both memory types through the same action space as text generation?

Researchers from Alibaba Group and Wuhan University have introduced Agentic Memory (AgeMem), a framework that lets large language model agents learn to manage both long-term and short-term memory as part of a single policy. Rather than relying on handwritten rules or external controllers, agents use memory tools integrated into the model’s action space to decide when to store, retrieve, summarize, and forget.

Why current LLM agents struggle with memory

Most agent frameworks treat memory as two loosely coupled systems.

Long-term memory stores user profiles, task information, and previous interactions across sessions. Short-term memory is the current context window, which holds the active dialogue and retrieved documents.

Existing systems design these two parts separately. Long-term memory is handled through an external store, such as a vector database with simple add and retrieve triggers. Short-term memory is managed by retrieval-augmented generation, sliding windows, or summarization schedules.

This separation creates several problems.

Long-term and short-term memory are optimized independently. Their interaction is not trained end-to-end. Heuristics decide when to write to memory and when to summarize. These rules are brittle and miss rare but important events. Adding controllers and expert models increases the cost and complexity of the system.

AgeMem removes external controllers and folds memory operations into the agent policy itself.

Memory as a tool in the agent’s action space

AgeMem exposes memory operations as tools. At each step, the model can emit either ordinary text tokens or tool calls. The framework defines six tools.

For long-term memory:

ADD stores a new memory item with content and metadata. UPDATE modifies an existing memory entry. DELETE removes obsolete or low-value items.

For short-term memory:

RETRIEVE performs a semantic search over long-term memory and inserts the retrieved items into the current context. SUMMARY compresses spans of the interaction into a short summary. FILTER removes context segments that are not useful for future reasoning.

The interaction protocol has a structured format. Each step begins with a block in which the model reasons privately. The model then emits either a block containing a JSON list of tool calls or a block containing the response for the user. Memory actions are therefore first-class decisions, not side effects.
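To make the tool interface concrete, here is a minimal sketch of how the six tools could be dispatched from a JSON list of tool calls. The `AgentMemory` class, the `execute_tool_calls` helper, the `{"name", "arguments"}` call schema, and the word-overlap scoring that stands in for semantic search are all assumptions made for illustration. They are not taken from the paper.

```python
import json
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    """Toy long-term store plus short-term context (hypothetical, illustrative only)."""
    long_term: dict = field(default_factory=dict)  # item id -> {"content", "metadata"}
    context: list = field(default_factory=list)    # short-term context segments
    _next_id: int = 0

    # Long-term memory tools
    def add(self, content, metadata=None):
        item_id = self._next_id
        self._next_id += 1
        self.long_term[item_id] = {"content": content, "metadata": metadata or {}}
        return item_id

    def update(self, item_id, content):
        self.long_term[item_id]["content"] = content

    def delete(self, item_id):
        self.long_term.pop(item_id, None)

    # Short-term memory tools
    def retrieve(self, query, top_k=1):
        # Assumption: word overlap stands in for a real semantic search.
        words = set(query.lower().split())
        ranked = sorted(
            self.long_term.values(),
            key=lambda item: len(words & set(item["content"].lower().split())),
            reverse=True,
        )
        hits = [item["content"] for item in ranked[:top_k]]
        self.context.extend(hits)  # retrieved items enter the current context
        return hits

    def summary(self, start, end, text):
        # Replace context[start:end] with a summary written by the model.
        self.context[start:end] = [text]

    def filter(self, indices):
        drop = set(indices)
        self.context = [seg for i, seg in enumerate(self.context) if i not in drop]


def execute_tool_calls(memory, tool_call_json):
    """Run a JSON list of {"name": ..., "arguments": {...}} calls in order."""
    tools = {
        "ADD": memory.add, "UPDATE": memory.update, "DELETE": memory.delete,
        "RETRIEVE": memory.retrieve, "SUMMARY": memory.summary, "FILTER": memory.filter,
    }
    return [tools[call["name"]](**call.get("arguments", {}))
            for call in json.loads(tool_call_json)]


memory = AgentMemory()
results = execute_tool_calls(memory, json.dumps([
    {"name": "ADD", "arguments": {"content": "User prefers vegetarian recipes"}},
    {"name": "ADD", "arguments": {"content": "User lives in Hangzhou"}},
    {"name": "RETRIEVE", "arguments": {"query": "which recipes does the user like"}},
]))
print(results[2])  # the retrieved item is also appended to memory.context
```

In a trained agent the model itself would emit the JSON list. The point of the sketch is only that storing, retrieving, summarizing, and filtering sit in the same dispatch path as any other tool call.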

Three-stage reinforcement learning for unified memory

AgeMem is trained with reinforcement learning in a way that couples long-term and short-term memory behavior.

The state at time t includes the current conversation context, the long-term memory store, and the task specification. The policy selects either a token or a tool call as the action. The training trajectory for each sample is divided into three stages.

Stage 1, long-term memory construction: The agent interacts in a casual setting and observes information that will become relevant later. It uses ADD, UPDATE, and DELETE to build and maintain long-term memory. The short-term context grows naturally during this stage.

Stage 2, short-term memory control under distractors: The short-term context is reset, while long-term memory persists. The agent now receives distractor content that is related but not needed. It must manage short-term memory with SUMMARY and FILTER to keep useful content and remove noise.

Stage 3, integrated reasoning: The final query arrives. The agent uses RETRIEVE to pull from long-term memory, controls the short-term context, and produces the answer.

A crucial detail is that long-term memory persists across all stages, while short-term memory is cleared between Stage 1 and Stage 2. This design forces the model to rely on retrieval rather than residual context, and it exposes realistic long-horizon dependencies.
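The three-stage schedule can be sketched as a simple rollout loop. Everything below is a hypothetical illustration: `run_three_stage_rollout` and `toy_policy` are invented names, and the hand-written rules inside `toy_policy` only stand in for the learned policy so that the example runs. The part that mirrors the description above is the control flow, where long-term memory survives every stage and the short-term context is cleared once Stage 1 ends.

```python
def toy_policy(stage, context, long_term, observation):
    """Hand-written stand-in for the learned policy (assumption, for illustration)."""
    if stage == 1 and observation.startswith("fact:"):
        long_term.append(observation)      # plays the role of ADD
    elif stage == 2 and observation.startswith("noise:"):
        context.remove(observation)        # plays the role of FILTER
    elif stage == 3:
        words = set(observation.lower().split())
        hits = [m for m in long_term if words & set(m.lower().split())]
        context.extend(hits)               # plays the role of RETRIEVE
        return hits
    return None


def run_three_stage_rollout(policy, casual_turns, distractors, query):
    long_term = []  # long-term memory: persists across all three stages
    context = []    # short-term context

    # Stage 1: casual interaction, the agent builds long-term memory.
    for turn in casual_turns:
        context.append(turn)
        policy(1, context, long_term, turn)

    # The short-term context is cleared before Stage 2; long-term memory is kept.
    context.clear()

    # Stage 2: distractors arrive, the agent must keep the context clean.
    for item in distractors:
        context.append(item)
        policy(2, context, long_term, item)

    # Stage 3: the final query can only be answered through retrieval.
    context.append(query)
    answer = policy(3, context, long_term, query)
    return answer, context, long_term


answer, final_context, store = run_three_stage_rollout(
    toy_policy,
    casual_turns=["hello there", "fact: the meeting is on friday"],
    distractors=["noise: the weather is nice", "noise: friday is a popular movie"],
    query="when is the meeting",
)
print(answer)
```

Because the Stage 1 turns are gone from the context by the time the query arrives, the only route to the answer in this toy run is the item that was written to long-term memory earlier.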

Reward design and step-wise GRPO

AgeMem uses a step-wise variant of Group Relative Policy Optimization (GRPO). For each task, the system samples multiple trajectories that form a group. A terminal reward is computed for each trajectory and normalized within the group to obtain an advantage signal. This advantage is broadcast to every step in the trajectory, so the final outcome trains the intermediate tool choices.
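As a rough sketch of the group normalization and broadcast described above, the snippet below standardizes terminal rewards within one group and copies each trajectory's advantage to all of its steps. The function names, the use of the population standard deviation, and the `eps` constant are assumptions; the paper's exact normalization may differ.

```python
import statistics


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize terminal rewards within one group of sampled trajectories."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # assumption: population std over the group
    return [(r - mean) / (std + eps) for r in rewards]


def broadcast_to_steps(advantages, steps_per_trajectory):
    """Give every step of a trajectory that trajectory's single advantage value."""
    return [[adv] * n for adv, n in zip(advantages, steps_per_trajectory)]


# Four sampled trajectories for the same task, with made-up terminal rewards.
group_rewards = [0.2, 0.8, 0.5, 0.5]
advantages = group_relative_advantages(group_rewards)
per_step = broadcast_to_steps(advantages, steps_per_trajectory=[3, 5, 2, 4])
print(advantages)
```

Trajectories that beat the group mean receive a positive advantage at every step, including the steps where a memory tool was called, which is how a terminal reward can shape intermediate tool choices.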

The total reward has three main components.

A task reward uses an LLM judge to score answer quality between 0 and 1. A context reward measures the quality of short-term memory operations, including compression, early summarization, and preservation of query-relevant content. A memory reward measures long-term memory quality, including the fraction of stored items that are high quality, the usefulness of maintenance operations, and the relevance of retrieved items to the query.

Uniform weights are used for these three components, so each contributes equally to the training signal. A penalty term is added if the agent exceeds the maximum allowed dialogue length or if the context overflows the limit.
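The reward composition can be illustrated with a small function. Only the structure comes from the description above: three equally weighted components plus penalties for excessive length and context overflow. The weight value of 1/3, the limits `max_turns` and `max_context_tokens`, and the penalty size are placeholder assumptions, not values reported by the authors.

```python
def total_reward(task, context, memory, num_turns, context_tokens,
                 max_turns=20, max_context_tokens=4096, penalty=0.5):
    """Equal-weight sum of three component rewards, minus limit penalties.

    All default limits and the penalty size are hypothetical placeholders.
    """
    weight = 1.0 / 3.0  # assumption: uniform weights normalized to sum to 1
    reward = weight * (task + context + memory)
    if num_turns > max_turns:
        reward -= penalty  # interaction ran longer than allowed
    if context_tokens > max_context_tokens:
        reward -= penalty  # context overflowed the limit
    return reward


within_limits = total_reward(0.9, 0.6, 0.3, num_turns=5, context_tokens=1000)
too_long = total_reward(0.9, 0.6, 0.3, num_turns=25, context_tokens=1000)
print(round(within_limits, 3), round(too_long, 3))
```

With equal weights, a trajectory cannot score well by answering correctly alone while leaving long-term memory cluttered or the context bloated, since each of those lowers one third of the signal.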

Experimental setup and main results

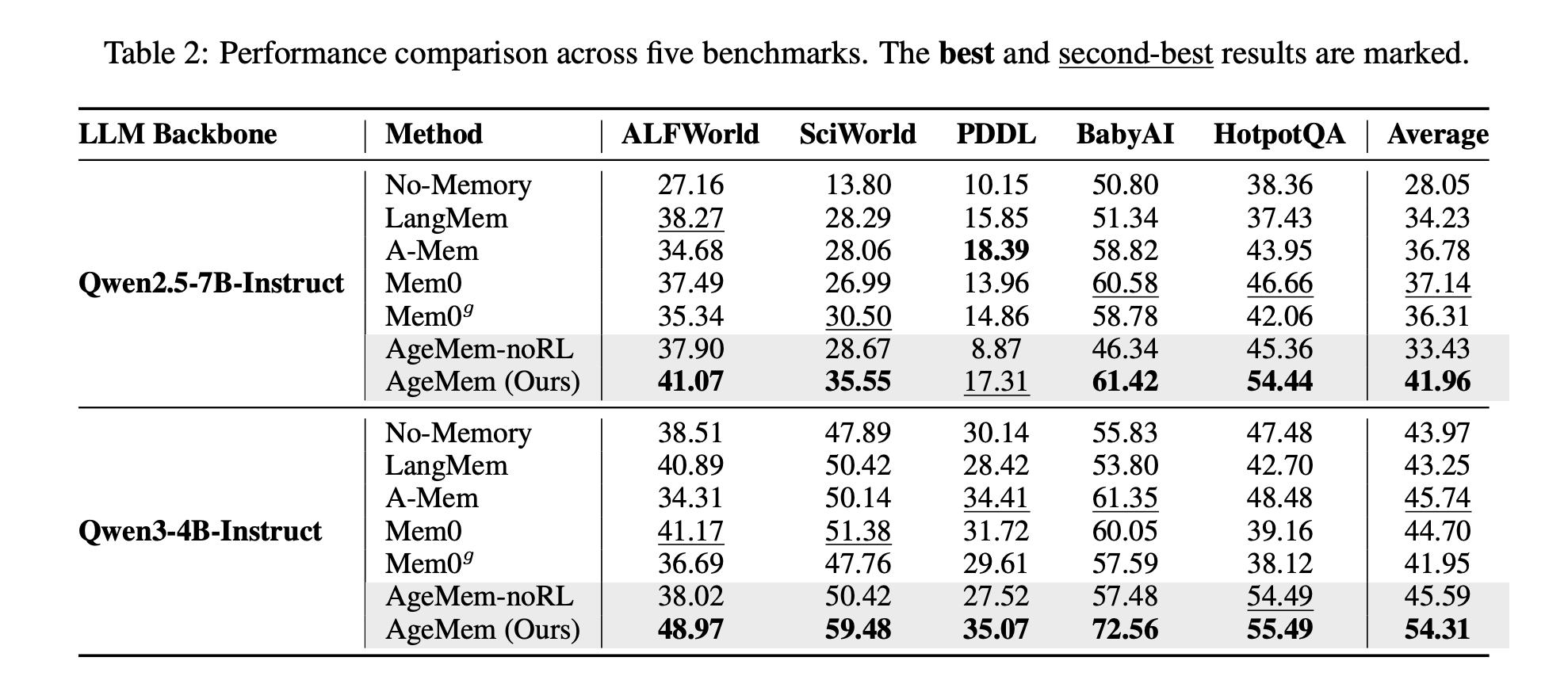

The research team fine-tunes AgeMem on the HotpotQA training split and evaluates it on five benchmarks.

ALFWorld for text-based embodied tasks. SciWorld for science-themed environments. BabyAI for instruction following. PDDL tasks for planning. HotpotQA for multi-hop question answering.

Metrics are success rate for ALFWorld, SciWorld, and BabyAI, progress rate for PDDL tasks, and an LLM judge score for HotpotQA. The team also defines a memory quality metric using an LLM evaluator that compares stored memories to HotpotQA’s supporting facts.

The baselines are LangMem, A Mem, Mem0, Mem0g, and a no-memory agent. The backbones are Qwen2.5-7B-Instruct and Qwen3-4B-Instruct.

On Qwen2.5-7B-Instruct, AgeMem reaches an average score of 41.96 across the five benchmarks, while the best baseline, Mem0, reaches 37.14. On Qwen3-4B-Instruct, AgeMem reaches 54.31, compared with 45.74 for the best baseline, A Mem.

Memory quality also improves. On HotpotQA, AgeMem reaches a memory quality score of 0.533 on Qwen2.5-7B and 0.605 on Qwen3-4B, higher than all baselines.

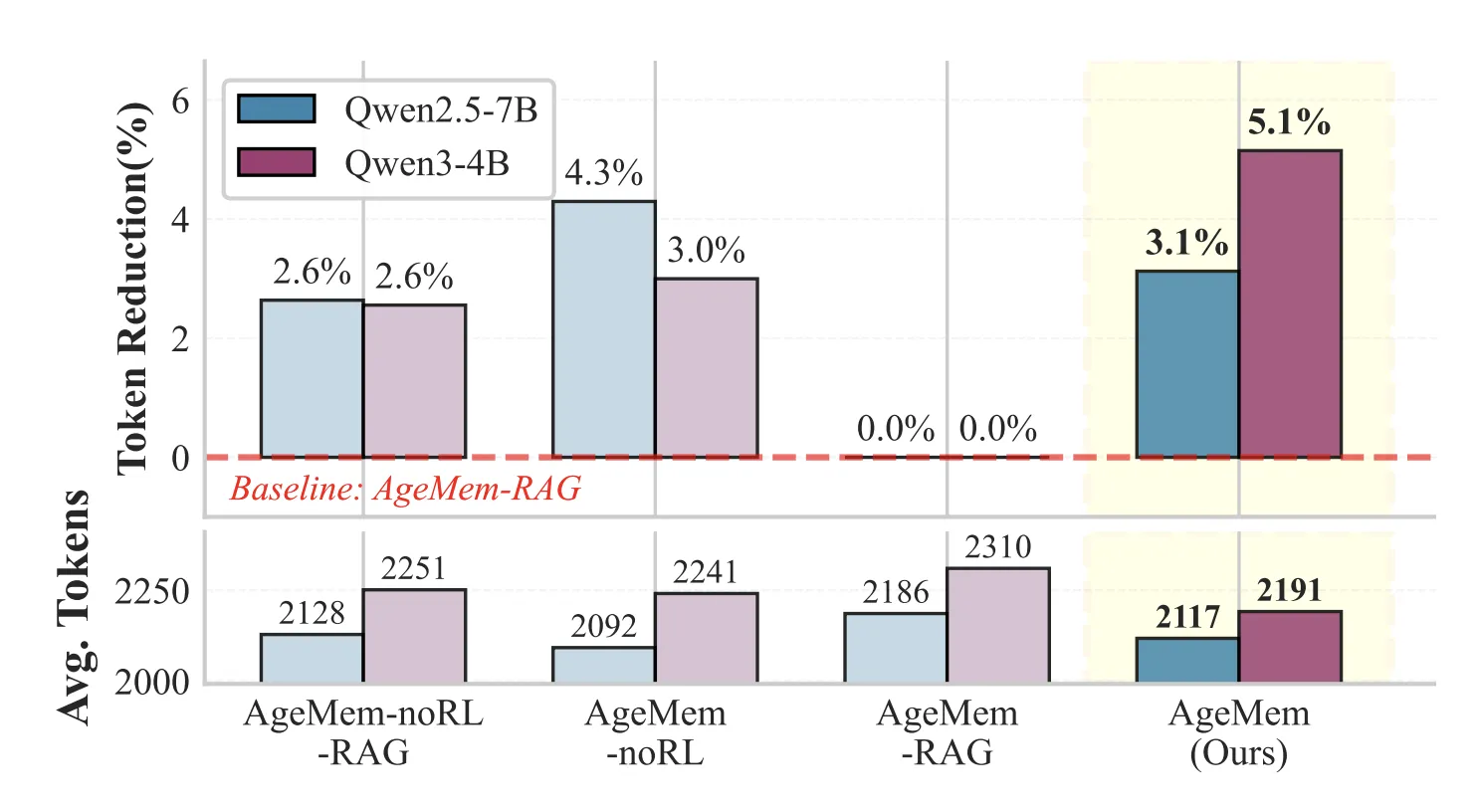

Short-term memory tools reduce prompt length while maintaining performance. On HotpotQA, configurations with the STM tools use roughly 3 to 5 percent fewer tokens per prompt than variants that replace the STM tools with a retrieval pipeline.

Ablation studies confirm that each component matters. Adding only long-term memory tools to a no-memory baseline already yields a clear improvement. Adding reinforcement learning on top of these tools improves scores further. The full system, with both long-term and short-term tools plus RL, yields up to 21.7 percentage points of improvement over the no-memory baseline on SciWorld.

Implications for LLM agent design

AgeMem suggests a design pattern for future agentic systems. Memory should be treated as part of the learned policy rather than as two external subsystems. By turning storage, retrieval, summarization, and filtering into explicit tools and training them jointly with language generation, agents learn when to remember, when to forget, and how to manage context efficiently over long horizons.

Key takeaways

AgeMem turns memory operations into explicit tools, so the same policy that generates text also decides when memory is added, updated, deleted, retrieved, summarized, and filtered.

Long-term and short-term memory are trained jointly through a three-stage RL setup. In this setup, long-term memory persists across stages, the short-term context is reset, and retrieval-based reasoning is enforced.

The reward function is a uniformly weighted combination of task accuracy, context management quality, and long-term memory quality, with penalties for context overflow and excessive interaction length.

Across ALFWorld, SciWorld, BabyAI, PDDL tasks, and HotpotQA, AgeMem on Qwen2.5-7B and Qwen3-4B consistently outperforms memory baselines such as LangMem, A Mem, and Mem0 on average score and memory quality metrics.

Short-term memory tools reduce prompt length by roughly 3 to 5 percent compared with a RAG-style baseline while maintaining or improving performance. This shows that learned summarization and filtering can replace hand-crafted context-handling rules.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of Marktechpost, an artificial intelligence media platform that stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform has over 2 million monthly views, reflecting its popularity among readers.